AS A DEFENDER OF THE PUBLIC TRUST IN SCIENCE, I am continually amazed at how otherwise rational individuals completely lose their ability to think critically when it comes to questions of vaccine risk. First, let us establish that vaccines contribute to autism risk. This PACE Law Review article by Mary Holland and Louis Conte, which reviewed compensated cases of vaccine-induced brain injury, found 38 instances in which the Special Master’s Court (aka “Vaccine Court”) ruled that vaccines contributed to encephalopathy and encephalitis, which then, according to the rulings, led to autism.

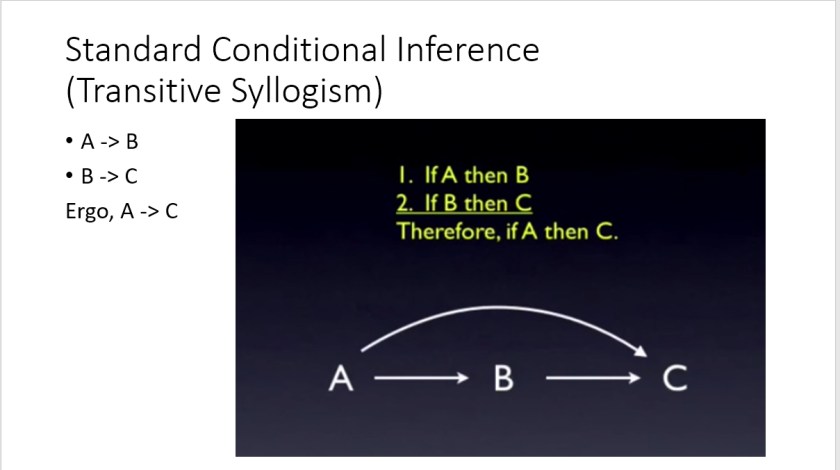

From propositional logic, we can see that this is what is called a transitive syllogism.

Let’s define that.

In propositional logic, a hypothetical syllogism is the name of a valid rule of inference that states that

- If A causes B

- If B causes C

Then A is a cause of C.
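The propositional form of this rule can be checked mechanically. The sketch below is a minimal illustration, assuming each “A causes B” statement is read as the material conditional “if A then B” (that reading is an assumption made here for the check). It enumerates every truth assignment and confirms that no assignment makes both premises true and the conclusion false. It verifies only the logical form; it says nothing about whether any particular premise is true.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material conditional: 'if p then q' is false only when p is true and q is false."""
    return (not p) or q


def hypothetical_syllogism_is_valid() -> bool:
    """Check that (A -> B) and (B -> C) together entail (A -> C) for all truth values."""
    for a, b, c in product([False, True], repeat=3):
        premises = implies(a, b) and implies(b, c)
        conclusion = implies(a, c)
        if premises and not conclusion:
            return False  # a counterexample would make the rule invalid
    return True


print(hypothetical_syllogism_is_valid())  # True
```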

A simple (non-conditional, non-hypothetical) transitive syllogism does not include the “If”s, and looks like this:

- A causes B

- B causes C

Therefore, A causes C.

For factor “A” to be listed among the causes of B (and therefore C):

“A” does not have to be an exclusive cause of B (and therefore C).

“A” does not always have to cause B.

There may be some circumstances in which A does not cause B, or some circumstances in which B does not cause C. In those instances, the syllogism is conditional. But it still means that under some conditions, A is a cause of B (and therefore A is a cause of C).

It is accepted that vaccines can cause encephalopathy, and according to the Vaccine Court, encephalopathy causes autism. Therefore, the CDC website looks very, very odd:

I have reviewed the flaws in the so-called “science” put forward by CDC, which evidently makes them feel that this violation of first-principle logic is warranted.

As I point out in “Causes” (for which I read >2,000 research studies), in this setting, the diagnosis of “Vaccine-Induced Encephalopathy-Mediated Autism” (VIEMA) seems most logically supported for some cases.

So, given this background, I was surprised to see a @PLOS Blog article by Dr. Peter Hotez.

In this article, which is presented as providing “the key scientific papers” refuting the “myth” that vaccines cause autism, Dr. Hotez begins by letting the reader know of his “unique perspective” on the issue. Dr. Hotez has a daughter “who has autism and other mental disabilities”. While my heart goes out to his daughter and her family for the struggles this has presented, let us be perfectly clear: his daughter’s condition does not change how propositional logic works, nor does it change any of the factors that may influence the validity of the studies he cites.

The article, published at blogs.plos.org, is part of the larger PLOS publication outlet, which supports open access to science. Here’s a snapshot of the PLOS web interface:

I know something about openness; I founded the open-access journal “Cancer Informatics” as a monument to my mom. I fiercely defended that journal and the publisher’s peer-review policy against the corrupting influence of someone (we’ll call him “Prof”) who wanted to turf the enterprise due to jealousy. He told me directly: he was a “fully-tenured professor who didn’t have ‘his own’ journal, what makes me think I can have my own journal?”. “Prof” contacted the publisher (called Libertas Academica at the time) with the ridiculous plan to allow authors to have their own colleagues conduct unblinded reviews, which the authors would then submit to the publisher. Giving credit where credit is due, the publisher rejected the idea, and the journal Cancer Informatics stands as a publishing powerhouse to this day.

I had often admired PLOS for bringing some very interesting and important papers forward. Some of the most insightful and important studies I cited in “Ebola: An Evolving Story” came from PLOS journals.

So when I submitted a comment to Dr. Hotez’ blog outlining the rather obvious flaws in the studies he cited as showing “Why Vaccines Do Not Cause Autism”, which I had read with great interest, I was very surprised to see that the response from @PLOS and @PLOSBlog (and, I presume, from Dr. Hotez) was to turf my comments into “in moderation” purgatory:

Here is a link to my full comments on the studies cited by Dr. Hotez: plos-one-blog-comments-awaiting-moderation

Somewhere along the way, I realized that the title of the article “Why Vaccines Don’t Cause Autism” is a terrible title: a “why” cannot be shown via association or correlation studies. The “Why” calls for specific details of mechanisms, not population-level studies conducted to detect significant differences in rates. For the review of >1,000 studies that show “Why” vaccines may cause autism in some people, visit envgencauses.com.

Anyway, I tweeted Dr. Hotez, and that’s when things became, well, bizarre:

Dr. Hotez’s response?

When dozens of other Vaccine-Risk Aware Americans called upon Dr. Hotez and @PLOS and @PLOS Blog to do the right thing, and publish my critique of the studies, they first balked, and then made a demonstrably false statement:

So, @PLOS and Dr. Hotez, here is your chance to share with us which part of my critique of the studies cited by Dr. Hotez represents “substantial disproven research”. I reproduce my comments here:

———————PLOS ONE COMMENT IN MODERATION——————

Most of the studies cited in this blog post have serious flaws. Their flaws do not mean that vaccines do not provide immunity to some. Therefore, my comments are restricted to the flaws in the studies cited.

Jain et al. suffers from the obvious issue that parents with an older unvaccinated autistic child are less likely to vaccinate (cohort bias).

Gadad et al. is a reboot of an earlier study that did in fact find an association until, as with so many other studies, some way was found to make it go away. The difference between the conclusions of the second version of the publication and the first is a mystery. But the first issue is one of power. Susceptibility to thimerosal leading to autism is only 1 to 2%. How could a study with a dozen or so animals ever hope to detect any effect? The Gadad et al. study design is only relevant if everyone were at risk of autism from vaccines containing thimerosal; clearly, everyone is not.

Here is a very thorough consideration of the effect of low-power very likely had on the Gadad study:

http://vaccinepapers.org/gadad-et-al-2015-pnas-journal/

The Taylor meta-analysis’ conclusion is based in part on studies rejected by IOM in 2012 as too flawed to be considered for the report on the vaccines/autism link. In fact, they rejected 17/22 studies, leaving five studies:

“The committee reviewed 22 studies to evaluate the risk of autism after the administration of MMR vaccine. Twelve studies (Chen et al., 2004; Dales et al., 2001; Fombonne and Chakrabarti, 2001; Fombonne et al., 2006; Geier and Geier, 2004; Honda et al., 2005; Kaye et al., 2001; Makela et al., 2002; Mrozek-Budzyn and Kieltyka, 2008; Steffenburg et al., 2003; Takahashi et al., 2001, 2003) were not considered in the weight of epidemiologic evidence because they provided data from a passive surveillance system lacking an unvaccinated comparison population or an ecological comparison study lacking individual-level data. Five controlled studies (DeStefano et al., 2004; Richler et al., 2006; Schultz et al., 2008; Taylor et al., 2002; Uchiyama et al., 2007) had very serious methodological limitations that precluded their inclusion in this assessment. Taylor et al. (2002) inadequately described the data analysis used to compare autism compounded by serious bowel problems or regression (cases) with autism free of such problems (controls).

DeStefano et al. (2004) and Uchiyama et al. (2007) did not provide sufficient data on whether autism onset or diagnosis preceded or followed MMR vaccination.

The study by Richler et al. (2006) had the potential for recall bias since the age at autism onset was determined using parental interviews, and their data analysis appeared to ignore pair-matching of cases and controls, which could have biased their findings toward the null. Schultz et al. (2008) conducted an Internet-based case-control study and excluded many participants due to missing survey data, which increased the potential for selection and information bias. The five remaining controlled studies (Farrington et al., 2001; Madsen et al., 2002; Mrozek-Budzyn et al., 2010; Smeeth et al., 2004; Taylor et al., 1999) contributed to the weight of epidemiologic evidence and are described below.”

The strong reorganization can have either genetic or environmental causes; mutations or environmental damage can yield the same phenotype via phenomimicry. Aluminum and mercury prevent astrocytes from taking up glutamate, leading to chronic microglial activation and destruction of neural precursor cells and dendrites. Long-range connectivity is altered as well. Thimerosal specifically inhibits ERAP1 and prevents proper protein shortening, leading to protein disorder. It is hard to develop normal brain structures when post-translational protein editing in the endoplasmic reticulum is awry. See https://www.ncbi.nlm.nih.gov/pubmed/27437077

I am very sorry to report that Zerbo et al. has seven major flaws. This is from a contribution of mine to another outlet. Zerbo et al. should be, in my view, retracted, given the very high risk of injury to fetuses that their initial “uncooked” analysis found:

Summary: We describe seven major flaws in the study by Zerbo et al. (2016). We conclude that these design and analysis flaws make the recent study of vaccination during pregnancy irrelevant for those most at risk, and even if the results were valid (which they are not), they would only be relevant for the mother/fetus pairs in the general population who are least likely to see autism develop as a result of vaccination during pregnancy.

A recent study of 196,929 children born between 2000 and 2010 was conducted to determine whether an association exists between influenza infection and/or vaccination during pregnancy and subsequent ASD diagnosis in the child. Our review of the study found serious design and analysis flaws.

In the initial analysis, the data showed vaccination increased autism risk.

According to the authors,

“The unadjusted proportion of ASD was slightly higher throughout follow-up among children of women who received influenza vaccinations during pregnancy compared with children of unvaccinated women.”

After initially finding an association, they analyzed the data and ultimately dismissed the result as likely due to chance.

Here are the flaws we identified in the study:

Use of Bonferroni Correction for Multiple Hypothesis Testing

The Bonferroni correction is widely regarded as overly conservative. It works as follows: If you test one hypothesis, the normal type I error risk is usually 0.05; that’s called alpha. At alpha = 0.05, it is expected that you will reject a true null hypothesis by chance 5/100 times. The Bonferroni correction works by dividing that risk among all of the hypotheses, so if you test two hypotheses, you can only accept a risk of 0.025, or 2.5% risk, for each. For three hypotheses, it’s 0.05/3, and so on. This study applied Bonferroni to the main hypothesis considering multiple supposed “confounders”: variables that might (emphasis might) explain a spurious correlation. A statistically significant but unwanted positive association is easy to hide by adding several additional proposed confounders, and treating them as independent “hypotheses”, when they are not, to justify invoking correction of the type I error risk for multiple comparisons. One can make a statistically significant finding vanish in a sea of statistical shamwizardry (Lyons-Weiler, 2016).
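The arithmetic of the correction can be sketched in a few lines. This is a minimal illustration, not code from any study; the function names and the example p-values are hypothetical.

```python
def bonferroni_threshold(alpha: float, n_tests: int) -> float:
    """Per-test significance threshold: the overall alpha divided by the number of tests."""
    if n_tests < 1:
        raise ValueError("n_tests must be at least 1")
    return alpha / n_tests


def bonferroni_reject(p_values, alpha=0.05):
    """Return, for each p-value, whether its null hypothesis is rejected under Bonferroni."""
    threshold = bonferroni_threshold(alpha, len(p_values))
    return [p <= threshold for p in p_values]


# Hypothetical p-values, for illustration only.
example_p_values = [0.01, 0.03, 0.20]
print(bonferroni_threshold(0.05, len(example_p_values)))  # 0.05 / 3
print(bonferroni_reject(example_p_values))  # [True, False, False]
```

Note that 0.03 would count as significant if tested alone at alpha = 0.05, but not after the threshold is divided by three.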

There are in fact less stringent methods to correct for multiple hypothesis testing, including adaptive methods. But the larger problem is that use of the Bonferroni correction in this manner is also unorthodox. Model selection criteria should be used, not multiple hypothesis testing, because the covariates used are not clearly independent of each other, and model fit is not assessed by corrections for multiple comparisons because the model terms may in fact interact. At the least, variance inflation factors should be reported.
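One well-known less stringent alternative is the Holm step-down procedure, which also controls the family-wise error rate. The sketch below is a generic illustration with hypothetical p-values; it is not a method used in the study, and it does not address the separate point about model selection.

```python
def holm_reject(p_values, alpha=0.05):
    """Holm step-down procedure: compare sorted p-values to alpha/m, alpha/(m-1), ..."""
    m = len(p_values)
    # Indices of the p-values, ordered from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, idx in enumerate(order):
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            break  # once one test fails, all larger p-values also fail
    return reject


# Hypothetical p-values, for illustration only.
example_p_values = [0.20, 0.01, 0.02]
print(holm_reject(example_p_values))  # [False, True, True]
```

With these same values, plain Bonferroni (threshold 0.05/3) would reject only the hypothesis with p = 0.01, while Holm also rejects the one with p = 0.02.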

Over-Correction Using Collinear Variables without Objective Model Selection Criteria

The data were “adjusted” with many covariates because they are “known” to be associated with increased risk of autism. In some cases, they treated variables as separate risk factors for autism on their own, when they are obviously associated with risk for autism due to susceptibility to environmental exposures. For example, it has been acknowledged that a mitochondrial genetic variant may increase susceptibility to the toxins in vaccines, eliciting a severe adverse reaction, including autism (e.g., Hannah Poling). In the overall analysis, Zerbo et al. misinterpret what those variables are telling them by ignoring their combined utility in predicting which mothers would have children with autism. Instead, they use the variables to merely assess association. Prediction model performance evaluation should include the model’s sensitivity, specificity, and accuracy, and machine-learning techniques exist that allow one to optimize such predictions using training sets (sets of patients used to learn the model) and test sets (independent sets of patients used to evaluate the model’s performance, and its generalizability).
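The three performance measures named above follow directly from a confusion matrix computed on a test set. A minimal sketch, assuming binary predictions and using made-up counts (nothing here comes from the Zerbo et al. data):

```python
def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Compute sensitivity, specificity, and accuracy from confusion-matrix counts."""
    return {
        # Fraction of true cases the model correctly flags.
        "sensitivity": tp / (tp + fn),
        # Fraction of true non-cases the model correctly clears.
        "specificity": tn / (tn + fp),
        # Fraction of all predictions that are correct.
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


# Hypothetical test-set counts, for illustration only.
metrics = classification_metrics(tp=30, fp=50, tn=900, fn=20)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

In this made-up example the accuracy is high (0.93) even though sensitivity is only 0.60, which is why all three measures should be reported together when the outcome is rare.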

Failure to Consider and Report Interaction Terms

This flaw is related to #2. Many of the variables treated as “confounders” by Zerbo et al. may instead be useful predictor variables to understand specifically who may be at risk of serious adverse events after vaccination, when used in combination. The variables are potential risk factors or risk indicators of environmental and/or genetic susceptibility that may interact with vaccination. For example, maleness increases susceptibility to mercury injury (Vahter et al., 2007; Camsari C et al., 2016), and thus gender could be a useful predictor variable (in general). Other variables may be even more informative, such as having allergies, asthma, or other autoimmune condition. There is a great deal of overlap between these three conditions, and all indicate an overactive immune system (Chan et al., 2015), and thus each is a potentially useful indicator of increased risk of overreaction to vaccine components (IOM, 2012).

Methods have long existed to assess the degree of independent and combined contribution of such variables, rather than treat them as competing hypotheses. Factoring out variation from covariates, leaving residual variation to be “explained” by other covariates, is “descriptive statistics”. Testing the utility of the covariates in combination is “prediction modeling”. Both require consideration of the interaction terms, and the interaction terms, when significant, point to (in the case of adverse events or drug efficacy) potentially useful indications that important subgroups may exist within the clinical population being studied. A focus on descriptive statistics is identifiable by the reporting of p-values (significance) of covariates only, without the accuracy, sensitivity and specificity of any of the prediction models. Interpretation here is key; integrative prediction modeling yields increased understanding of the contribution of each covariate. We need studies that reveal potentially useful indicators of which patients are least able to tolerate a vaccine.

In 2010, a study entitled “What’s the best statistic for a simple test of genetic association in a case-control study” (Kuo and Feingold, 2010) concluded “that the most commonly used approach to handle covariates–modeling covariate main effects but not interactions–is almost never a good idea”.

The same is true of correlative studies of health outcomes, even when they do not consider genetics, especially when the variables considered may be proxies for genetic variation. Both asthma and autoimmunity are thought to have, at least in part, a genetic basis, and that genetic contribution may be related to the genetic component of the familial risk seen in autism. In other words, we need to start looking for the genetic susceptibility subgroup(s) in all future studies on causes of autism. Zerbo et al. failed to objectively compare the predictive performance of alternative models, including those with interactions, and used a weak analysis framework that seems designed to indemnify vaccination during pregnancy. By doing so, they have severely impeded understanding rather than improving it.

The length the analysts went to in torturing the data is telling:

Maternal and infant characteristics, obtained from KPNC prenatal and pediatric electronic medical records and state vital statistics databases, adjusted the measure of association between maternal influenza infection and vaccination during pregnancy and ASD risk in multivariate analyses. Covariates included the child’s sex, calendar conception year (categorical variable), gestational age, maternal prepregnancy body mass index (BMI, calculated as weight in kilograms divided by height in meters squared) (BMI < 18.5 = underweight; 18.5 ≤ BMI < 25 = normal weight; 25 ≤ BMI < 30 = overweight; BMI ≥ 30 = obese), maternal age at delivery (younger than 20, 20 to 24, 25 to 29, 30 to 34, and ≥ 35 years), maternal education at delivery (≤ high school graduate, some college education, college graduate, postgraduate, or unknown), maternal race/ethnicity (Asian, black, white, or other), and gestational diabetes (yes/no). Additional covariates included maternal asthma (yes/no), hypertension (yes/no), autoimmune disease (yes/no), and allergies (yes/no) recorded in the electronic medical record before the conception date. All covariates were chosen a priori because of their association with ASD in previous studies… or because they are indications for influenza vaccination.

We find a major flaw in the use of the concept of “correcting for” maternal asthma and autoimmunity. In addition to the fact that vaccines can cause both asthma and autoimmunity, maternal asthma and autoimmunity are associated with autism. Autism is clearly a complex medical condition that involves both the innate and the adaptive immune system. Because it would be expected that pregnant women with these conditions have high risk, treating these conditions only as independent hypotheses is a serious flaw.

Based on background knowledge, the study is deeply flawed in “correcting for” certain variables, specifically maternal asthma and autoimmunity. First, they are not independent variables. Both maternal asthma and autoimmunity are intimately associated with autism and immune activation (see for example, Lyall et al., 2016). Given the background knowledge supported by science on this question, one would expect to find that the vaccine association is higher in mothers with asthma and autoimmunity. They also mention that risk is highest for children conceived in influenza-heavy months. Their mothers are the most likely to be vaccinated in the first trimester. Those pregnancies should have been compared separately, and, if that were done, separate power calculations should be conducted to ensure that association could be found if it did, in fact, exist.

In technical terms, the use of highly collinear variables (variables that are highly correlated and may not be independent of each other) is called multicollinearity, and it can contribute to “overfitting the model.” When used in the extreme, such as was done with past, flawed studies on the question of vaccines and autism, the analyst can “pull out” all of the variance (differences) among the patients using multiple, non-independent (redundant) variables, thus leaving only residual, random difference (noise) to be “explained” by the final independent variable (vaccination). The study did not report any of the objective measures of model overfit, and yet each of these factors was used to change variance in the model, and the authors used those changes in the data to dismiss the original connection as “possibly due to chance.”

Dr. Lyons-Weiler’s “Ice Cream Consumption Corrected For Cone Sales” Analogy

An apt analogy would be a study that sought to examine whether there was an association between daytime temperatures and ice cream consumption at ice cream parlors across cities in the US. After collecting both variables (daytime temperature being an independent, or predictor variable; ice cream consumption being the dependent variable), the data analyst decides to “correct for” the variables “consumption of cones” and “trips to ice cream parlors” because they already are suspected to be able to “explain” the volume of ice cream consumption across cities in the US. They clearly could use the sale of cones to predict ice cream consumption, but it would be pointless. Better indicators might be the median daytime temperature, the average amount of expendable income, or the frequency of lactose intolerance. These variables would tend to lead to increased understanding of “ice cream consumption”, and would empower the analyst to predict ice cream sales. “Correcting” the model for the sale of cones, or for the number of trips to ice cream parlors, would leave only random variation among customers. They are dependent and collinear variables: as trips to ice cream parlors increase, both the volume of consumed ice cream and the number of cones consumed increase. Use of other, known dependent variables as independent variables, use of highly collinear variables, and model fit are examples of statistical shamwizardry.
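The collinearity in this analogy can be quantified with a variance inflation factor (VIF). The sketch below is a minimal illustration for the special case of exactly two predictors, where the VIF reduces to 1 / (1 - r²), with r the Pearson correlation between them. The city-level numbers are invented for the analogy; with more than two predictors one would instead regress each predictor on all the others.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def vif_two_predictors(x1, x2):
    """VIF for a model with exactly two predictors: 1 / (1 - r^2)."""
    r = pearson_r(x1, x2)
    return 1.0 / (1.0 - r * r)


# Invented data: parlor trips and cones sold in five hypothetical cities.
trips_to_parlors = [10, 20, 30, 40, 50]
cones_sold = [9, 21, 29, 42, 49]

print(vif_two_predictors(trips_to_parlors, cones_sold))
```

A common rule of thumb treats a VIF above about 10 as a sign of problematic collinearity; the invented trips and cones data land far above that, while two uncorrelated predictors would give a VIF of 1.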

These are clever techniques in this setting because the non-specialist (even peer-reviewers) might think they seem appropriate. A give-away is that the functional relationship among variables (dependent/independent) has been perturbed. Other studies have been warped using the same strategy. It took CDC four years to figure this out for the Verstraeten et al. study, so much so, that the lead author sent an email complaining that the association just would not “go away” (Verstraeten, 1999).

Lack of Power Analysis

The study does not report whether their sample sizes were sufficient to achieve rejection of the null hypothesis of no association, if one did, in fact, exist. And the fact that they studied no interactions is telling: such studies require much larger sample sizes. Because power for interactions is not reported, the suitability of the sample size of the study cannot be assessed.
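For readers unfamiliar with what a power calculation looks like, here is a minimal sketch for the simplest case: a two-sided z-test comparing two proportions with equal group sizes, using the normal approximation (and ignoring the negligible probability of rejecting in the wrong direction). The outcome rates and sample sizes are hypothetical and are not taken from Zerbo et al.; power for interaction terms would require a more involved calculation.

```python
import math
from statistics import NormalDist


def power_two_proportions(p1: float, p2: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided z-test for a difference between two proportions."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    p_bar = (p1 + p2) / 2
    # Standard error under the null (pooled) and under the alternative (unpooled).
    se_null = math.sqrt(2 * p_bar * (1 - p_bar) / n_per_group)
    se_alt = math.sqrt(p1 * (1 - p1) / n_per_group + p2 * (1 - p2) / n_per_group)
    return std_normal.cdf((abs(p1 - p2) - z_crit * se_null) / se_alt)


# Hypothetical outcome rates of 1.0% vs 1.2%, for illustration only.
for n in (1_000, 10_000, 100_000):
    print(n, round(power_two_proportions(0.010, 0.012, n), 3))
```

The loop shows how strongly power depends on sample size when the difference in rates is small.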

Biased Eligibility

The study’s eligibility criteria restrict the potential relevance of the Zerbo et al. study to parents whose children do not have later-diagnosed conditions on the spectrum. They report: “Eligibility was restricted to singleton children who were born at a gestational age of at least 24 weeks and who remained health plan members until at least 2 years of age (n = 196,929).”

Many cases of ASD are not diagnosed until a child enters school; only severe cases of autism are usually diagnosed at age 2 or 3. Asperger’s and other higher-functioning ASDs and PDDs are diagnosed much later, often not until age 4 or 5, or even later. The Zerbo et al. study results are diluted by including children who left the health plan between the ages of 2 and 5, but who may have received an ASD diagnosis after leaving the health plan.

There may therefore be a large ascertainment bias issue, especially if leaving Kaiser is related to likelihood of later diagnosis as may be the case if parents determine they need a “better” insurance plan, or if a parent loses their job due to having to care for a child with autism.

This study appears to have winnowed the study population down to people with lower risk. That isn’t science; it’s marketing. When assessing risk meant to have implications for the entire population, studies should never deliberately exclude individuals with indicators that might include potentially related risk. That includes genetic mutations.

These people matter.

Anyone who would be given a vaccine in real-world conditions should qualify for inclusion in a study, and it is essential to determine whether, and more importantly how, special risk exists for vulnerable population groups. For example, if women with asthma and other autoimmune conditions who are homozygous for MTHFR C677 are removed from such studies, and an association disappears, that points toward, not away from, causality for a subset of individuals. As one such woman told us, “I shouldn’t be ‘controlled for,’ I should be front and center in the analysis!”

Excluding individuals with mutations that might confer risk should be avoided, too.

These descriptive studies use the weakest of possible multivariate statistical approaches, and badly at that. We need to do predictive science and see if these factors contribute predictive power jointly, or independently, and remain constantly diligent in seeking to understand which population(s) the results of studies like these may be irrelevant for as a direct result of the design of analysis. The public health policies emerging from Zerbo et al. must be restricted to a narrow population, defined by both the eligibility criteria and by the incorrect “correction for” collinear predictive and outcome variables. The study is, as conducted, irrelevant for women with asthma and autoimmunity, and for patients who change health care practices when the child is around the age of 2.5-5 years.

Extreme Cohort Effect

A cohort effect is a variable not included in the study, that changes over time differently between clinical groups, that can be expected to directly influence the dependent variable, and is a type of confounding variable. We find evidence of an extreme cohort effect in the Zerbo et al. study. No major effort existed before 2009 to vaccinate women during pregnancy. This study covers the time period 2000-2010. Most of the vaccinated women are therefore from the later years in the study:

“…vaccination among pregnant women in this cohort (45,231, 23%). Vaccination rates increased from a low of 6% in 2000 to a high of 58% in 2010, and most vaccinated women were older, educated women.”

Flu shots during pregnancy are now accompanied by pressure to accept other vaccines as well. For example, during the study time period, 14% received Tdap during pregnancy. By 2013, Tdap was administered during pregnancy in 41.7% of live births (Kharbanda et al., 2016).

Failure to Report all Serious Adverse Events (SAEs)

The study was narrow in focus and did not consider other SAEs. Rates of spontaneous termination of pregnancies, for example, were not considered; other studies have reported (without note) dramatically increased rates of spontaneous terminations in pregnant women who received flu vaccines (England, 2012).

In sum, the very design of the study, and both the design and the implementation of the analysis approach used in the study, severely limit the utility of the conclusions for the general population.

Factoring out variation in risk contributed by such variables effectively removes from the study the women who might be at most risk of serious adverse events due to vaccines. This shifts the focus away from the general population to the subset of the population who are not likely to have SAEs. In the roll-out of news stories about this study, this critical detail is completely lost, and in clinical translation, the unwarranted, non-sequitur conclusion that “vaccines during pregnancy do not contribute to autism risk” is grossly misleading. Furthermore, even if the results were not flawed, the changes in the vaccination protocol during and since the study make the results irrelevant for the current population of pregnant women in the US.

This study’s initial results showing an increase in autism in the vaccinated group should be heeded as a warning to prospective parents. The logic of vaccine safety science is contorted and twisted. Several flu vaccine formulations still contain thimerosal. We know from first principles that thimerosal is a toxin; it shuts down the expression of the protein ERAP1, and causes widespread problems with proper protein truncation (Stamogiannos et al., 2016). We call for an independent reanalysis of the findings using appropriate modeling, and a retraction of the current publication.

References

Camsari C et al., 2016. Effects of periconception cadmium and mercury co-administration to mice on indices of chronic disease in male offspring at maturity. Environ Health Perspect.

Chan SK, Gelfand EW. 2015. Primary Immunodeficiency Masquerading as Allergic Disease. Immunol Allergy Clin North Am. 35(4):767-78. doi: 10.1016/j.iac.2015.07.008.

England, C. 2012. 4,250% Increase in Fetal Deaths Reported to VAERS After Flu Shot Given to Pregnant Women https://vactruth.com/2012/11/23/flu-shot-spikes-fetal-death/

Kharbanda EO et al., 2016. Maternal Tdap vaccination: Coverage and acute safety outcomes in the vaccine safety datalink, 2007-2013. Vaccine. 34(7):968-73. doi: 10.1016/j.vaccine.2015.12.046.

Kuo CL, Feingold E. 2010. What’s the best statistic for a simple test of genetic association in a case-control study? Genet Epidemiol. 34(3):246-53. doi: 10.1002/gepi.20455.

Lyall K et al., 2016. Maternal immune-mediated conditions, autism spectrum disorders, and developmental delay. J Autism Dev Disord. 44(7):1546-55. doi: 10.1007/s10803-013-2017-2.

Lyons-Weiler, J. 2016. Cures vs. Profits: Successes in Translational Medicine. World Scientific.

Vahter M et al., 2007. Gender differences in the disposition and toxicity of metals. Environ Res. 104(1):85-95.

Verstraeten, T. “It just won’t go away” email to Robert Davis and Frank DeStefano, Dec 17, 1999

Stamogiannos A et al., 2016. Screening Identifies Thimerosal as a Selective Inhibitor of Endoplasmic Reticulum Aminopeptidase 1. ACS Med Chem Lett. 7(7):681-5. doi: 10.1021/acsmedchemlett.6b00084.

—————– END OF COMMENTS “IN MODERATION”——————–

Postscript:

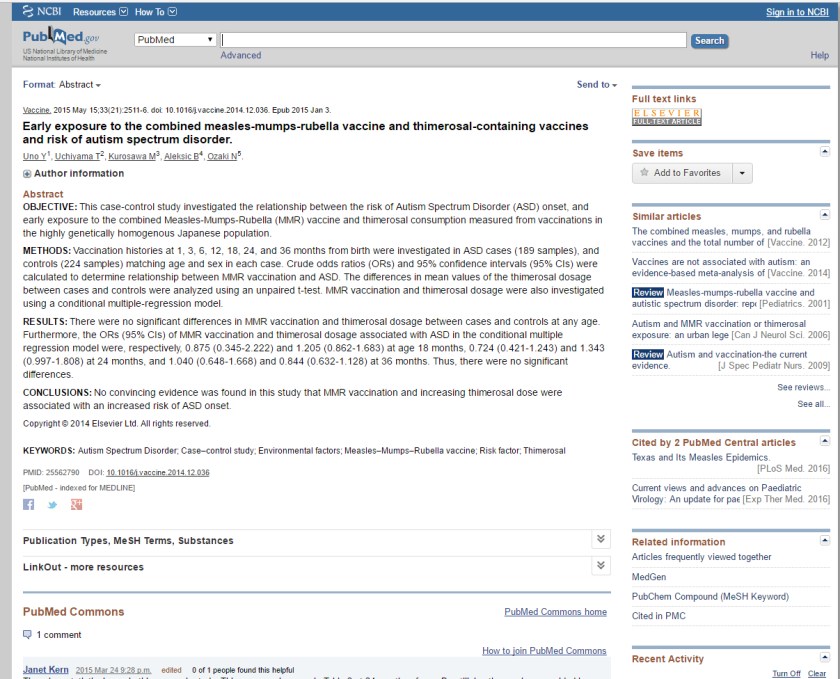

One of the studies cited by Dr. Hotez (Uno et al.) that I did NOT criticize in my comment is already under fire for CHANGING DATA AFTER PUBLICATION. See the comment at the bottom of the PubMed entry?

Here is the text of the comment:

“Janet Kern, 2015 Mar 24, 9:28 p.m. (edited)

There is a statistical error in this research study. This error can be seen in Table 2 at 24 months of age. By utilizing the numbers provided in Table 2 (see below) it is evident that the difference between cases and controls at 24 months is highly statistically significant. The journal, Vaccine, was notified of the error. However, since, to date, no clarification has been issued, it is important to note that the conclusions seen in the abstract above are misleading and are the opposite of the conclusion supported by the data. The corrected results indicate that there is a statistically significant relationship between Thimerosal exposure and autism spectrum disorder.

At 24 months, a t-test using the data provided reveals the following:

Unpaired t test: mean of sample 1 from summary = 804.2 (n = 189); mean of sample 2 from summary = 632.1 (n = 224).

Assuming equal variances: combined standard error = 71.838701; df = 411; t = 2.395645; one-sided P = 0.0085; two-sided P = 0.017; 95% confidence interval for difference between means = 30.882882 to 313.317118; power (for 5% significance) = 90.07%.

Assuming unequal variances: combined standard error = 72.061016; df = 394.166765; t(d) = 2.388254; one-sided P = 0.0087; two-sided P = 0.0174; 95% confidence interval for difference between means = 30.445864 to 313.754136; power (for 5% significance) = 66.35%.

Update 5/24/2015: When the journal Vaccine was notified of the error, it notified the authors. In response to the notification of the error, the authors changed the numbers in Table 2 of their study. The authors changed the mean and standard deviation for the controls at 24 months from 632.1 (715.1) to 676.8 (719.5). No explanation for the error or justification for the change was given.

To date, the journal Vaccine and the study authors have refused to release the study dataset for further evaluation.”

ALL of the “key studies” cited by Hotez are flawed, some of them quite seriously.

I also learned of this piece of information, not disclosed by Dr. Hotez:

WELCOME TO VACCINE-RISK AWARE AMERICA. jlw

NB: The section of the critique of Zerbo et al. originally appeared earlier this year published with co-authors simultaneously at

and

Well done. If they delete your comments, do as you have done, reprint the lot anyway. Seems PLOS now suffers from the paid brigade?

Is this the same Hotez that wants to force parents to vaccinate their child?

https://www.seattletimes.com/opinion/anti-vaccine-misinformation-denies-childrens-rights/